Sometimes I Wonder if the Machines Are Watching Us Build Them

In our latest opinion piece, we reflect on the rise of autonomous AI coding tools and what it means for trust, control, and the future of embedded systems.

OPINION

David Kim

5/26/2025 · 3 min read

Sometimes I wonder if the machines are watching us build them.

Not in a sci-fi, judgmental way, but more like quiet apprentices, learning from our habits, workflows, and assumptions. Waiting. Adapting. Getting better, faster than we ever imagined.

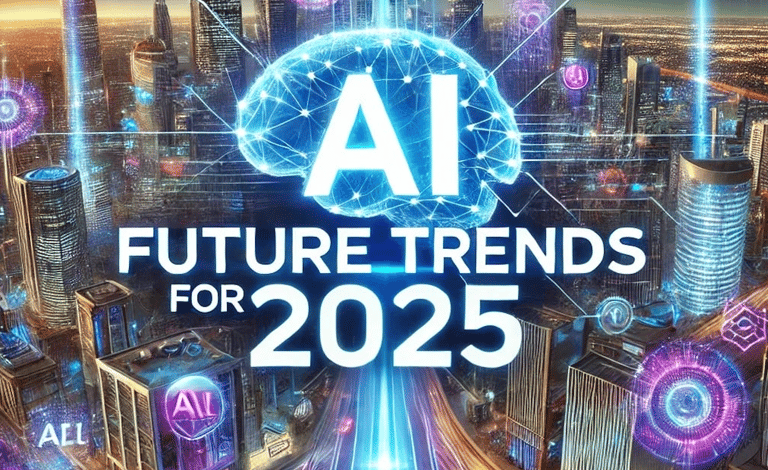

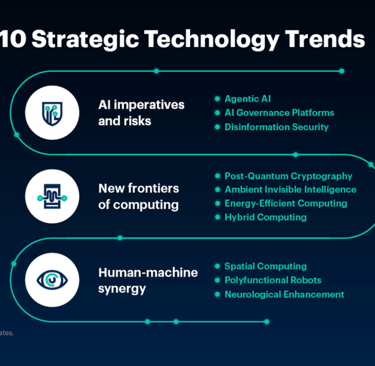

This week, I revisited Gartner’s 2025 Strategic Technology Trends. Among the standout themes?

• AI-augmented development

• Machine customers

• Autonomic systems

• Platform engineering

None of these are futuristic anymore. They’re happening right now, and unfolding much faster than most people realize.

The story that really stuck with me came out of Microsoft and Google: AI agents are no longer just code copilots. They’re collaborative engineers.

They don’t just suggest lines of code; they build entire features, test modules, optimize APIs, and handle logical flow.

If this doesn’t make you pause, it should. Not because it’s threatening, but because it’s profound.

We’re not just creating tools; we’re delegating work. Real, high-value, decision-heavy work.

At ArbaLabs, this trend is more than a curiosity; it’s a direct signal. Our focus has always been on embedded systems, edge intelligence, and trustworthy AI. When AI starts coding its own logic into those systems, the question isn’t “can it?” but “should it?”

While the rest of the ArbaLabs team is currently in Europe, exploring deep tech collaboration opportunities, I’ve been thinking about what this shift means for our broader mission, and for the people building the infrastructure of tomorrow.

Here are a few reflections I wanted to share:

⸻

1. We’re Shifting from Control to Coordination

Gartner calls it “autonomic systems”: self-managing physical or software systems that learn and adapt.

In practice, that means engineers are no longer fully in control of their stack. They’re guiding, prompting, and supervising rather than hand-coding every detail.

This requires a mindset shift, especially in safety-critical domains.

⸻

2. Trust is the New Bottleneck

Speed isn’t our biggest risk; misplaced trust is.

As AI agents gain autonomy, their ability to make silent decisions increases. Who verifies the logic? Who signs off when machine-written code updates itself during operations in space or autonomous flight?

This is why data integrity, auditability, and traceability (core values at ArbaLabs) matter more than ever.

⸻

3. Developers Are Becoming System Architects

Instead of writing every loop, developers are becoming orchestrators, designing frameworks that interact with agents, APIs, and autonomic feedback loops.

It’s less about “code ownership” and more about governance, responsibility, and observability.

The Gartner 2025 roadmap reads like a to-do list for any serious deep tech team. And at ArbaLabs, we’re paying close attention, not because we’re chasing trends, but because our work depends on staying grounded in them.

This blog is a space where I’ll share more of these reflections: tracking the shifts, raising questions, and reporting what we’re seeing in the field.

The future isn’t just being built.

It’s being delegated, and we’re the ones writing the handoff instructions.

David Kim

📩 david@arbalabs.com

🌐 arbalabs.com

#ArbaLabs #DavidKim #ArbaLabsInsights #ArbaLabsBlog #DeepTech #AITrends #AIinSoftwareDevelopment #AutonomousAI #AIProductivity #AutonomicSystems #AIInfrastructure

About the Author:

David is a global tech analyst and storyteller exploring how frontier technologies like edge AI and blockchain are shaping our collective future. At ArbaLabs, he curates insights, trends, and conversations that bridge innovation with society. His focus: making complex ideas accessible and inspiring curiosity across sectors.